Machine learning and artificial intelligence are now part of everyday app development, and the iOS SDK ships with a number of frameworks for building apps with ML-related features. In this tutorial, let’s explore two built-in ML APIs: one for converting text to speech and one for performing language detection.

Using Text to Speech in AVFoundation

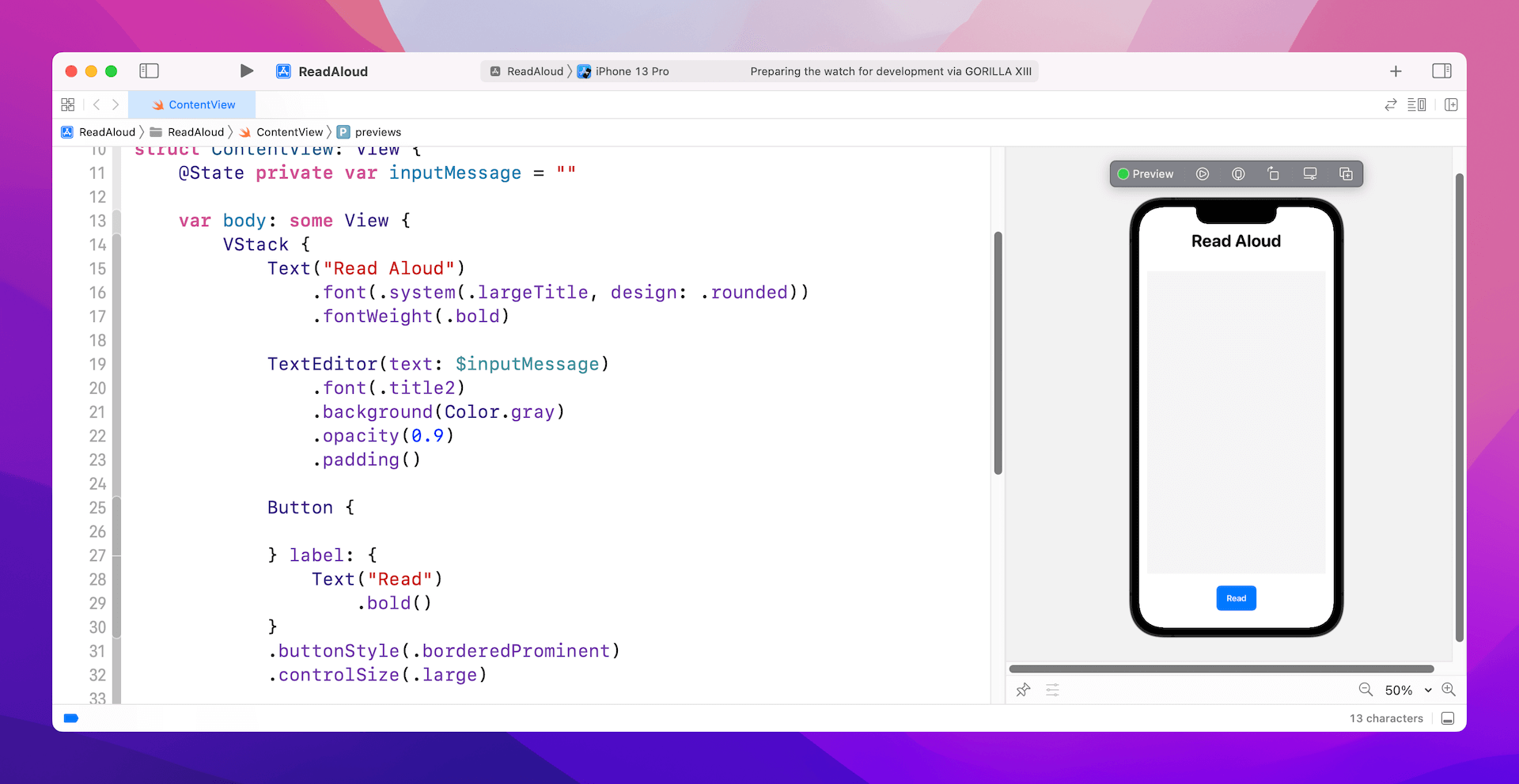

Suppose we are building an app that reads a user’s input message aloud. To do that, we need to implement some form of text-to-speech.

The AVFoundation framework comes with text-to-speech APIs. To use them, we first have to import the framework:

import AVFoundation

Next, create an instance of AVSpeechSynthesizer:

let speechSynthesizer = AVSpeechSynthesizer()

To convert the text message to speech, you can write the code like this:

let utterance = AVSpeechUtterance(string: inputMessage)
utterance.pitchMultiplier = 1.0
utterance.rate = 0.5
utterance.voice = AVSpeechSynthesisVoice(language: "en-US")
speechSynthesizer.speak(utterance)

You create an instance of AVSpeechUtterance with the text for the synthesizer to speak. Optionally, you can configure the pitch, rate, and voice. For the voice parameter, we set the language to English (U.S.). Lastly, you pass the utterance object to the speech synthesizer to read the text in English.
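As a small sketch of those optional settings, the helper below builds an utterance while keeping the rate and pitch inside the ranges AVFoundation accepts. The function name `makeUtterance` and its default arguments are assumptions made for illustration; they are not part of the framework.

```swift
import AVFoundation

// Hypothetical helper (not part of AVFoundation): builds a configured utterance.
func makeUtterance(_ text: String,
                   languageCode: String = "en-US",
                   rate: Float = AVSpeechUtteranceDefaultSpeechRate,
                   pitch: Float = 1.0) -> AVSpeechUtterance {
    let utterance = AVSpeechUtterance(string: text)

    // Keep the rate within the bounds the framework defines.
    utterance.rate = min(max(rate, AVSpeechUtteranceMinimumSpeechRate),
                         AVSpeechUtteranceMaximumSpeechRate)

    // pitchMultiplier is documented to accept values from 0.5 to 2.0.
    utterance.pitchMultiplier = min(max(pitch, 0.5), 2.0)

    // The initializer is failable; a nil voice means the default voice is used.
    utterance.voice = AVSpeechSynthesisVoice(language: languageCode)
    return utterance
}

let synthesizer = AVSpeechSynthesizer()
synthesizer.speak(makeUtterance("Hello, world!", rate: 0.4))
```

Clamping is only one possible design choice here. An app could just as well reject out-of-range values instead of silently adjusting them.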

The built-in speech synthesizer is capable of speaking multiple languages such as Chinese, Japanese, and French. To tell the synthesizer the language to speak, you have to pass the correct language code when creating the instance of AVSpeechSynthesisVoice.

To find out all the language codes that the device supports, you can call the speechVoices() class method of AVSpeechSynthesisVoice:

let voices = AVSpeechSynthesisVoice.speechVoices()
for voice in voices {
    print(voice.language)
}

Here are some of the supported language codes:

- Japanese – ja-JP

- Korean – ko-KR

- French – fr-FR

- Italian – it-IT

- Cantonese – zh-HK

- Mandarin (Taiwan) – zh-TW

- Mandarin (Mainland China, Putonghua) – zh-CN
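To narrow the full voice list down to one language family, one option is to filter the installed voices by a language-code prefix. The sketch below is illustrative only: `voices(matching:)` is a hypothetical helper, and the voices actually returned depend on the device and OS version.

```swift
import AVFoundation

// Hypothetical helper: returns the installed voices whose language code
// starts with the given prefix, e.g. "zh" for all Chinese variants.
func voices(matching prefix: String) -> [AVSpeechSynthesisVoice] {
    AVSpeechSynthesisVoice.speechVoices().filter { $0.language.hasPrefix(prefix) }
}

for voice in voices(matching: "zh") {
    // name is the voice's display name; language is its language code.
    print("\(voice.name): \(voice.language)")
}
```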

In some cases, you may need to interrupt the speech synthesizer. You can call the stopSpeaking(at:) method to stop it:

speechSynthesizer.stopSpeaking(at: .immediate)

Performing Language Identification Using Natural Language Framework

As the code above shows, the speech synthesizer needs to know the language of the input message in order to convert the text to speech correctly. Wouldn’t it be great if the app could detect the language of the input message automatically?

The NaturalLanguage framework provides a variety of natural language processing (NLP) functionality including language identification.

To use the NLP APIs, first import the NaturalLanguage framework:

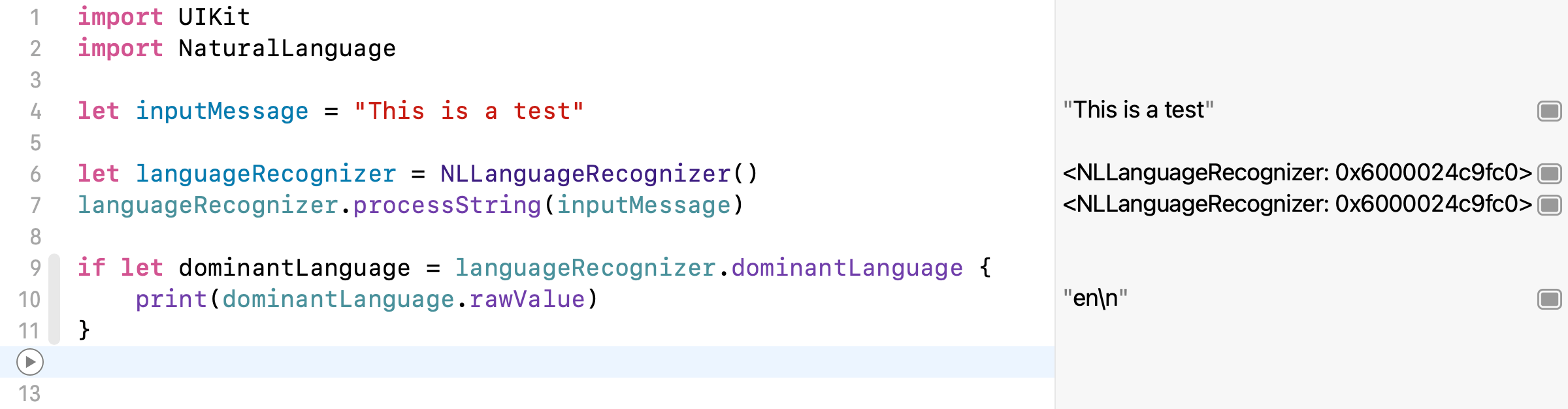

import NaturalLanguage

You just need a couple lines of code to detect the language of a text message:

let languageRecognizer = NLLanguageRecognizer()
languageRecognizer.processString(inputMessage)

The code above creates an instance of NLLanguageRecognizer and then invokes processString(_:) to process the input message. Once processed, the identified language is stored in the dominantLanguage property:

if let dominantLanguage = languageRecognizer.dominantLanguage {
    print(dominantLanguage.rawValue)
}

As a quick example, when inputMessage is an English sentence, NLLanguageRecognizer recognizes the language as English (i.e. en). If you change inputMessage to Japanese like below, dominantLanguage becomes ja:

let inputMessage = "これはテストです"

The dominantLanguage property may have no value if the input message is like this:

let inputMessage = "1234949485🎃😸💩🥸"

Wrap Up

In this tutorial, we have walked you through two of the built-in machine learning APIs for converting text to speech and identifying the language of a text message. With these ML APIs, you can easily incorporate text-to-speech and language detection features into your iOS apps.
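The two APIs can also be combined so that the app picks a voice based on the detected language. What follows is a minimal sketch under a few assumptions: `detectVoice(for:)` and `speak(_:using:)` are hypothetical helpers, the Chinese code mapping is an illustrative guess at a sensible default, and falling back to the default voice is just one possible policy.

```swift
import AVFoundation
import NaturalLanguage

// Hypothetical helper: detects the dominant language of `text` and returns
// an installed voice for it, or nil when nothing suitable is found.
func detectVoice(for text: String) -> AVSpeechSynthesisVoice? {
    let recognizer = NLLanguageRecognizer()
    recognizer.processString(text)
    guard let language = recognizer.dominantLanguage else { return nil }

    // NLLanguage uses script-based codes for Chinese (zh-Hans, zh-Hant),
    // while voices use region-based ones. This mapping is an assumption.
    let prefix: String
    switch language {
    case .simplifiedChinese: prefix = "zh-CN"
    case .traditionalChinese: prefix = "zh-TW"
    default: prefix = language.rawValue
    }
    return AVSpeechSynthesisVoice.speechVoices().first { $0.language.hasPrefix(prefix) }
}

// Hypothetical helper: speaks `text` with a voice matching its language.
func speak(_ text: String, using synthesizer: AVSpeechSynthesizer) {
    let utterance = AVSpeechUtterance(string: text)
    // A nil voice falls back to the default voice when detection fails.
    utterance.voice = detectVoice(for: text)
    synthesizer.speak(utterance)
}

let synthesizer = AVSpeechSynthesizer()
speak("これはテストです", using: synthesizer)
```

Matching on a prefix rather than an exact code lets a short code such as ja match a regional voice such as ja-JP. Which voice comes first for a given prefix depends on what is installed on the device.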